We are on the brink of Industry 5.0, where AI is set to transform business practices across the board, and large language models (LLMs) have emerged as transformative tools in this evolving landscape.

These advanced AI systems are capable of understanding and generating human-like text and are revolutionizing various sectors by enhancing efficiency, improving customer engagement, and streamlining operations.

As organizations strive to remain competitive, the challenge of LLM cost management has come to the forefront. It has become crucial for businesses to set clear objectives before leveraging large language models (LLMs).

According to a Gartner prediction, 30% of generative AI projects will be abandoned after the proof-of-concept (POC) stage by the end of 2025. This figure underscores the complexity and variability of costs associated with implementing LLMs in organizations.

Cost is a crucial factor that concerns every organization. Whether they want to deploy or utilize LLM applications today, the pressing question remains – how much will it cost?

That’s why careful financial planning and risk assessment are being emphasized to contain the hefty costs associated with LLM-powered applications.

In this blog, we will discuss ten essential cost-reduction strategies for businesses to manage LLM costs efficiently. These best practices will help organizations maximize their investment in LLM technology while minimizing operational costs.

What Are LLM-Powered Applications?

LLM-powered applications are software solutions that leverage large language models to perform a variety of tasks involving natural language processing (NLP).

These applications utilize the capabilities of LLMs to understand, generate, and manipulate human languages. LLMs are trained on vast amounts of text data, enabling them to perform tasks like text generation, summarization, translation, question-answering, and more.

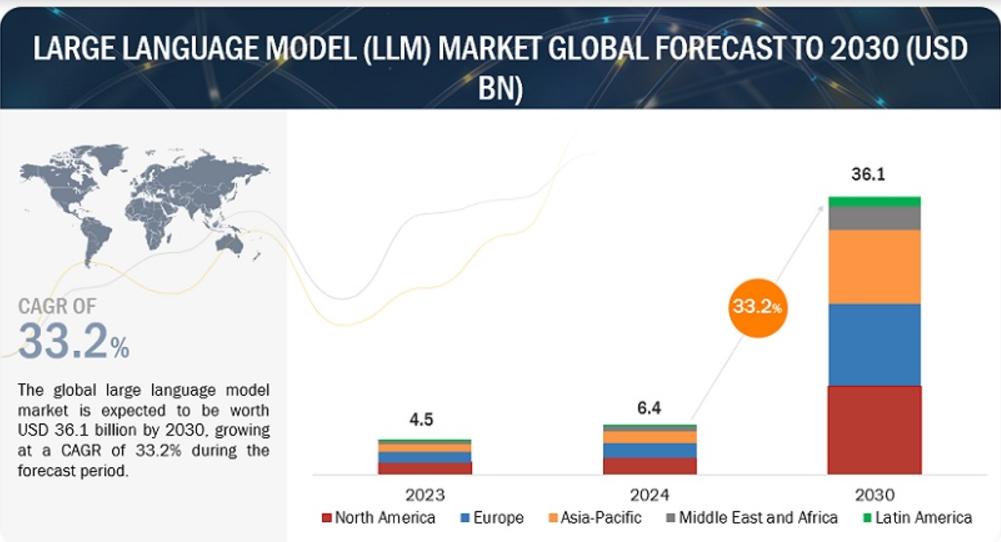

The global LLM market is expected to reach USD 6.4 billion in 2024 and USD 36.1 billion by 2030, growing at a CAGR of 33.2%.

LLMs are very good at understanding compressed and abbreviated text. By embedding LLMs, these applications can enhance automation, personalization, and user engagement.

Let’s explore their key characteristics:

- Natural Language Understanding

LLM-powered apps can interpret complex human language inputs, whether written or spoken, facilitating more intuitive interactions.

- Text Generation

LLM applications leverage advanced machine learning techniques to process vast amounts of text data, enabling them to understand context, tone, and intent and to generate coherent text in response.

- Contextual Awareness

Modern LLMs can retain and adapt to the context of a conversation or task, offering responses that reflect the nuances of prior interactions, which is essential for building coherent and engaging user experiences.

- Multitasking

LLM-powered apps can handle a range of tasks, such as answering questions, providing explanations, drafting emails, and helping users make decisions based on input data.

Why Does Cost Efficiency Matter For LLM Applications?

Cost is a crucial factor in the development, deployment, and maintenance of LLM-powered applications. These models are highly capable and offer advanced functionality, but they also come with significant financial and resource requirements.

Achieving cost efficiency for LLM applications ensures that these advanced tools remain scalable, accessible, and sustainable for businesses and organizations.

Here is why cost efficiency matters in LLM applications:

1. High Computational Demands

LLM applications can spot patterns that traditional applications miss, but that capability depends on massive computational power. Training a large model requires running thousands of GPUs (graphics processing units) or TPUs (tensor processing units) for weeks or even months, which leads to substantial energy consumption and infrastructure costs.

Even after the model is trained, using LLMs for inference requires considerable computational resources. Running queries on these models can incur ongoing cloud service costs. That’s when an organization understands the need for cost efficiency, which means optimizing the infrastructure, reducing energy usage, and making smart choices about when and how to use LLMs to balance performance and expense.

2. Scalability and Accessibility

Businesses that deploy LLM applications at scale must understand the cost factor to make these tools accessible to a large number of users without compromising performance. With scalable solutions, as the demand grows, so do the costs associated with running these applications.

That’s when it becomes challenging for startups or organizations with tight budgets to adopt LLM technology without compromising quality. Without cost-effective strategies, businesses face limitations in expanding their services or may need to pass the high cost on to customers, which makes the product less competitive.

3. Operational Efficiency

LLMs are not a one-time investment. To keep outputs accurate, you need to fine-tune and monitor the models continuously so they stay relevant and effective. Managing these ongoing operational costs effectively is essential for the long-term viability of any LLM-based solution.

Companies that fail to control these expenses could face challenges in maintaining their systems, reducing service quality, or competing with others who have optimized their cost structures.

4. Cloud Computing Costs

Many LLM-powered applications rely on cloud platforms for both training and inference, which involves pay-per-use pricing models. The cost of cloud services, especially for resource-intensive LLM applications, can escalate quickly.

That’s when essential cost-reduction strategies come into play. Finding efficient ways to use these cloud resources, such as using smaller models for specific tasks or optimizing how requests are routed to the cloud, can reduce overall expenses.

5. Cost-effective Alternatives

While the biggest LLMs, such as GPT-4, are extremely powerful, there are lighter, smaller, and more specialized models that can perform similar tasks at a fraction of the cost.

By choosing a suitable model for each use case, organizations can significantly reduce operational expenses. This is especially true when a full-scale LLM isn’t necessary for a given task, which leaves room for cheaper, more efficient options.

6. Competitive Advantage

As LLM-powered solutions become more common, cost efficiency can provide a competitive edge. Companies that provide high-quality AI experiences while keeping costs low can attract a large consumer base.

For this reason, companies need to focus on cost-reduction strategies before investing in LLM-powered solutions. Both innovation and value for money are critical for building a market presence in this competitive landscape.

What Are The Essential Cost-Reduction Strategies For Businesses Using LLM-Powered Applications?

Businesses are increasingly turning to large language models (LLMs) to enhance their operations and drive innovation. However, the cost associated with maintaining and implementing these LLM models is significant.

This is why it’s crucial to adopt cost-reduction strategies to harness the full potential of LLMs while minimizing expenses. Whether you’re a small startup or a large enterprise, these strategies will empower you to optimize your processes and drive sustainable growth in an ever-evolving market.

Let’s explore ten proven cost-reduction strategies for businesses using LLM-powered applications.

1. Optimize Model Selection and Usage

While working with LLM-powered applications, it is easy to get caught up in the hype around choosing the most powerful model. Giving in to that hype usually comes with a hefty price.

So, one of the most impactful strategies for LLM cost management is selecting the right model. Not every application requires the most advanced or powerful model.

Selecting a model that balances performance and cost often delivers better value than defaulting to the largest model. A simpler model may suffice for specific tasks and save money. Smaller models also tend to respond faster and need fewer computational resources.
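To make the trade-off concrete, here is a minimal sketch that estimates monthly spend for two models from average token counts per request. The model names and per-1,000-token prices are placeholders chosen for illustration, not quotes from any provider; substitute your own provider's rates.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ModelPricing:
    """Per-1,000-token prices for one model (hypothetical figures)."""
    name: str
    input_usd_per_1k: float
    output_usd_per_1k: float


def monthly_cost(pricing: ModelPricing, requests: int,
                 input_tokens: int, output_tokens: int) -> float:
    """Estimate monthly spend from average tokens per request."""
    per_request = (
        input_tokens / 1000 * pricing.input_usd_per_1k
        + output_tokens / 1000 * pricing.output_usd_per_1k
    )
    return per_request * requests


# Placeholder prices for illustration only.
LARGE = ModelPricing("large-model", input_usd_per_1k=0.01, output_usd_per_1k=0.03)
SMALL = ModelPricing("small-model", input_usd_per_1k=0.0005, output_usd_per_1k=0.0015)

if __name__ == "__main__":
    for model in (LARGE, SMALL):
        cost = monthly_cost(model, requests=100_000, input_tokens=500, output_tokens=200)
        print(f"{model.name}: ${cost:,.2f} per month")
```

Running the same estimate with your real traffic figures shows quickly whether the quality gain from a larger model justifies the price difference for a given task.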

2. Implement Efficient Fine-Tuning Techniques

This is one of the most powerful techniques for effective LLM cost management. LLMs are not one-size-fits-all: while off-the-shelf models can handle a wide range of tasks, they may not be cost-effective for your specific use case.

This is where fine-tuning comes in. It is the process of adapting a pre-trained LLM to a specific task or domain instead of training a model from scratch. With this process, you can create a customized model that suits your specific requirements.

Suppose you are running a customer support chatbot for a tech company. A general-purpose LLM can handle common queries but struggles with product-specific and technical questions. This leads to longer conversations, which in turn leads to higher token consumption.

By fine-tuning the model on a dataset of previous interactions, you can create a chatbot that understands and responds to these technical queries. This results in quicker resolution, lower token consumption per conversation, and minimal resource usage.
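Whether fine-tuning pays off depends on how many conversations it takes to recover the one-off tuning cost. The sketch below works through that break-even point. All figures are hypothetical, and it assumes the per-token price stays the same after fine-tuning, which may not hold with every provider.

```python
import math


def breakeven_conversations(fine_tune_cost_usd: float,
                            tokens_saved_per_conversation: float,
                            usd_per_1k_tokens: float) -> int:
    """Conversations needed before token savings cover the tuning cost."""
    saving_per_conversation = tokens_saved_per_conversation / 1000 * usd_per_1k_tokens
    if saving_per_conversation <= 0:
        raise ValueError("fine-tuning must save tokens to break even")
    return math.ceil(fine_tune_cost_usd / saving_per_conversation)


if __name__ == "__main__":
    # Hypothetical inputs: a 500 USD tuning job, 1,500 tokens saved per
    # conversation, and a price of 0.002 USD per 1,000 tokens.
    needed = breakeven_conversations(500.0, 1500, 0.002)
    print(f"Break-even after {needed:,} conversations")
```

If your chatbot handles fewer conversations than the break-even figure over the model's useful life, a well-written prompt on a general model may be the cheaper route.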

3. Use Open-Source Alternatives When Feasible

Another cost-effective strategy is using open-source alternatives. Open-source models present a viable and cost-effective option for businesses looking to leverage LLM capabilities without incurring high licensing fees.

Organizations can avoid the financial burden associated with proprietary models, which often come with expensive usage fees. They can modify and adapt open-source models to meet specific business needs without the restrictions that often accompany proprietary solutions.

Organizations also benefit from a vibrant community of developers who contribute to the improvement and maintenance of open-source models, providing ongoing updates and enhancements.

4. Deploy LLMs on Cost-Effective Cloud Platforms

When it comes to deploying an LLM, choosing the right cloud service provider, such as AWS, GCP, or Microsoft Azure, is vital, as each provides its own set of tools for managing AI workloads.

Selecting a provider whose pricing structure best fits your expected usage and performance needs is crucial for managing costs effectively.

Data security is another crucial factor to address before deploying an LLM in the cloud. In addition, many cloud providers offer free tiers with limited resources, which can be useful for testing and development before scaling.

Take advantage of reserved instances or long-term commitment discounts to lower the overall cost of cloud resources.

5. Integrate Token Optimization Strategies

Token usage can significantly impact costs when working with LLMs. Tokens are the basic unit of input and output for language models. When a user provides an input prompt, it is broken down into individual tokens before being processed by the model.

The number of tokens in your prompts directly affects the computational resources the LLM requires. The more tokens, the higher the processing demand, which results in increased expenses and longer response times.

Streamline input data (prompts) to reduce the number of tokens processed, ensuring that only essential information is sent to the model. Use algorithms to compress text data before sending it to the model, which can help decrease the token count and associated costs.
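As a rough illustration of prompt streamlining, the sketch below collapses extra whitespace, drops repeated lines, and keeps only the most recent chat messages that fit a token budget. The `estimate_tokens` helper is a crude word-count stand-in assumed for this example; real tokenizers count differently, so use your provider's tokenizer for billing-accurate numbers.

```python
import re
from typing import List


def estimate_tokens(text: str) -> int:
    """Crude approximation: one token per whitespace-separated word."""
    return len(text.split())


def compact_prompt(text: str) -> str:
    """Collapse runs of whitespace and drop blank or repeated lines."""
    seen = set()
    kept = []
    for line in text.splitlines():
        line = re.sub(r"\s+", " ", line).strip()
        if not line or line in seen:
            continue
        seen.add(line)
        kept.append(line)
    return "\n".join(kept)


def fit_history(messages: List[str], budget: int) -> List[str]:
    """Keep the newest messages whose combined estimate fits the budget."""
    kept = []
    used = 0
    for message in reversed(messages):
        cost = estimate_tokens(message)
        if used + cost > budget:
            break
        kept.append(message)
        used += cost
    kept.reverse()  # restore chronological order
    return kept


if __name__ == "__main__":
    raw = "Hello   world\n\nHello world\n  Order  123 "
    print(compact_prompt(raw))
    print(fit_history(["a b c", "d e", "f"], budget=3))
```

Dropping older messages is the simplest policy; summarizing them instead is a common alternative when earlier context still matters.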

6. Prioritize Batch Processing Over Real-Time Queries

Batch request processing can lead to substantial cost savings, especially if you run a self-hosted model. Instead of processing requests individually, combine multiple queries into a single batch to maximize resource utilization.

This approach has two benefits. First, you can schedule less urgent jobs during off-peak hours. Second, processing requests together lowers costs and reduces server load.
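A minimal sketch of the batching idea follows: queued prompts are split into fixed-size groups, and each group is sent in one call. The `run_batch` callable is a hypothetical hook for your own self-hosted model or batch endpoint, and `fake_run_batch` is only a stand-in so the example runs on its own.

```python
from typing import Callable, Iterator, List, Sequence


def chunked(items: Sequence[str], batch_size: int) -> Iterator[List[str]]:
    """Yield consecutive slices of at most batch_size items."""
    if batch_size < 1:
        raise ValueError("batch_size must be at least 1")
    for start in range(0, len(items), batch_size):
        yield list(items[start:start + batch_size])


def process_in_batches(prompts: Sequence[str],
                       run_batch: Callable[[List[str]], List[str]],
                       batch_size: int = 8) -> List[str]:
    """Send prompts in groups and return results in the original order."""
    results: List[str] = []
    for batch in chunked(prompts, batch_size):
        results.extend(run_batch(batch))
    return results


def fake_run_batch(batch: List[str]) -> List[str]:
    """Stand-in for a real model call; it just upper-cases each prompt."""
    return [prompt.upper() for prompt in batch]


if __name__ == "__main__":
    queued = ["summarize a", "summarize b", "summarize c"]
    print(process_in_batches(queued, fake_run_batch, batch_size=2))
```

The right batch size depends on your hardware and latency tolerance, so treat the default of 8 as a starting point to tune rather than a recommendation.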

7. Leverage Hybrid AI Models

Hybrid models can optimize performance while controlling costs. Use traditional AI models for simpler tasks and reserve LLMs for more complex queries, balancing efficiency and expense.

Implement specialized models tailored for specific functions, which can be more cost-effective than relying solely on a single LLM for all operations.
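The routing idea can be sketched as follows: a cheap handler answers the queries it recognizes, and only the rest reach the LLM. Here a keyword lookup stands in for the "traditional" model, and both `FAQ_ANSWERS` and the `call_llm` callable are hypothetical names introduced for this example.

```python
from typing import Callable, Tuple

# Hypothetical canned answers; a real system might use a small
# intent classifier here instead of keyword matching.
FAQ_ANSWERS = {
    "order status": "You can track your order from the Orders page.",
    "refund": "Refunds are processed within 5 business days.",
}


def route(query: str, call_llm: Callable[[str], str]) -> Tuple[str, str]:
    """Return (handler_name, answer), using the LLM only as a fallback."""
    normalized = query.lower()
    for keyword, answer in FAQ_ANSWERS.items():
        if keyword in normalized:
            return ("rules", answer)
    return ("llm", call_llm(query))


if __name__ == "__main__":
    def stub_llm(prompt: str) -> str:
        # Stand-in for a real LLM call.
        return f"[LLM answer to: {prompt}]"

    print(route("How do I get a refund?", stub_llm))
    print(route("Compare plan A and plan B for my team", stub_llm))
```

Logging which handler served each query also shows how much traffic the cheap path absorbs, which helps justify or revise the split.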

8. Implement Usage Monitoring and Budget Caps

Monitoring usage is essential for controlling costs effectively. Use monitoring tools to track resource consumption, spot patterns, and identify areas for optimization.

You can also set budget caps and alerts to prevent unexpected overspending, ensuring that costs remain within manageable limits.
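A budget cap can be enforced in application code as well as in a cloud console. The sketch below is one possible in-process tracker, with hypothetical class and parameter names, that signals an alert at 80% of the cap and refuses requests that would exceed it.

```python
class BudgetExceeded(RuntimeError):
    """Raised when a request would push spend past the cap."""


class BudgetTracker:
    """Tracks cumulative spend against a cap (illustrative only)."""

    def __init__(self, cap_usd: float, alert_ratio: float = 0.8) -> None:
        if cap_usd <= 0 or not 0 < alert_ratio <= 1:
            raise ValueError("cap must be positive and alert_ratio in (0, 1]")
        self.cap_usd = cap_usd
        self.alert_ratio = alert_ratio
        self.spent_usd = 0.0

    def record(self, cost_usd: float) -> bool:
        """Add one request's cost; return True once the alert level is reached."""
        if self.spent_usd + cost_usd > self.cap_usd:
            raise BudgetExceeded(
                f"request of {cost_usd:.2f} USD would exceed cap of {self.cap_usd:.2f} USD"
            )
        self.spent_usd += cost_usd
        return self.spent_usd >= self.cap_usd * self.alert_ratio


if __name__ == "__main__":
    tracker = BudgetTracker(cap_usd=10.0)
    for cost in (5.0, 4.0, 2.0):
        try:
            alert = tracker.record(cost)
            print(f"spent {tracker.spent_usd:.2f} USD, alert={alert}")
        except BudgetExceeded as exc:
            print(f"blocked: {exc}")
```

In production you would persist the running total and reset it each billing period; this in-memory version only shows the control flow.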

9. Adopt Serverless or Edge Computing for Deployment

Serverless and edge computing models can provide significant cost advantages. With serverless architectures, costs are incurred only for the compute time used, which can lead to substantial savings for fluctuating workloads.

Deploying models closer to data sources through edge computing can minimize latency and reduce the need for extensive data transfer, further lowering costs.
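Whether pay-per-use pricing beats an always-on server depends on request volume. The sketch below compares the two for a given month. The per-second price and the monthly server cost are hypothetical inputs, and it ignores cold starts and data transfer fees.

```python
def serverless_cost(requests: int, seconds_per_request: float,
                    usd_per_second: float) -> float:
    """Pay-per-use cost: billed only for compute time actually used."""
    return requests * seconds_per_request * usd_per_second


def cheaper_option(requests: int, always_on_usd: float,
                   seconds_per_request: float, usd_per_second: float) -> str:
    """Name the cheaper deployment style for one month of traffic."""
    pay_per_use = serverless_cost(requests, seconds_per_request, usd_per_second)
    return "serverless" if pay_per_use < always_on_usd else "always-on"


if __name__ == "__main__":
    # Hypothetical figures: 300 USD per month for an always-on server,
    # 2 seconds of compute per request, 0.0001 USD per compute second.
    for monthly_requests in (100_000, 5_000_000):
        choice = cheaper_option(monthly_requests, 300.0, 2.0, 0.0001)
        print(f"{monthly_requests:,} requests per month: {choice}")
```

Low or spiky traffic tends to favor serverless, while sustained high volume tends to favor dedicated capacity.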

10. Regularly Update and Retrain Models

Keeping models up to date is crucial for maintaining efficiency and effectiveness. Schedule periodic updates to ensure models incorporate the latest data and improvements, which can enhance performance and reduce operational costs.

Adopt techniques that allow models to learn from new data without full retraining, saving time and computational resources while keeping the model relevant.

Real-World Examples Of Successful Cost Reduction Strategies For Businesses Using LLM-Powered Applications

In this competitive landscape, organizations are continuously seeking innovative ways to enhance their business efficiency and reduce costs. Businesses across various sectors have successfully implemented LLM-powered solutions to achieve significant cost savings.

If you are someone who wants to achieve the same, explore these real-world examples of successful cost-reduction strategies for businesses using LLM-powered applications.

1. Customer Support Automation In eCommerce

For example, LLM-powered chatbots integrated with Shopify stores can handle customer queries quickly and efficiently.

You can also deploy AI chat assistants to handle common inquiries such as order tracking, refund processes, and product recommendations.

This not only reduces reliance on large customer service teams but can also cut operational costs by 30%.

2. Automated Content Generation For Marketing

LLM-powered applications are used for marketing to generate SEO-optimized blog posts, social media copy, and marketing emails.

HubSpot uses AI-driven content tools to streamline content creation. Using such tools can lower the need for external copywriting services, reducing content production costs by 40% and expediting campaign launches by 50%.

3. Financial Document Analysis In Banking

LLM applications are used to review legal contracts and financial agreements for compliance and risk factors. JPMorgan Chase has successfully implemented AI-powered tools for contract analysis.

It has reduced manual effort by up to 360,000 hours annually, saving millions in operational costs while improving the accuracy of contract reviews.

4. Streamlining Recruitment Processes In HR

LLM-powered applications offer a range of benefits for businesses looking to improve their hiring processes. These models can analyze vast amounts of data and identify the most qualified candidates quickly, reducing the risk of making bad hires.

This can accelerate hiring processes by 70%, significantly reducing recruitment agency fees and internal manpower costs.

5. Inventory Management Optimization In Retail

LLM applications, along with machine learning algorithms, are used in the retail sector to optimize inventory. They predict inventory needs based on historical sales data and customer trends.

Walmart has employed an AI-powered predictive model for inventory control. It not only minimized overstocking and understocking issues but also reduced storage costs by 25% and improved supply chain efficiency.

6. Enhanced Fraud Detection In Finance

LLMs can analyze vast amounts of transaction data to find anomalies and patterns, which lets fintech companies detect fraudulent activity early, before it escalates.

PayPal uses LLMs for real-time fraud detection. You can implement AI models to analyze transaction patterns and flag suspicious activities. This reduces fraud-related losses while cutting the operational cost of manual fraud investigation.

7. Training and Upskilling In Corporations

Many organizations are leveraging LLM-powered applications for employee training. They have created personalized learning modules and instant feedback systems to upskill employees.

This has reduced the training program costs and enhanced learning efficiency. Google has adopted LLM-powered platforms for employee training.

8. Automated Customer Feedback Analysis In Telecom

The telecom industry leverages LLMs to process and categorize customer complaints and feedback from multiple channels. This process has reduced manual analysis costs and improved customer retention rates by responding to concerns more efficiently.

AT&T is a well-known telecommunication provider in the US. They have successfully adopted LLM-powered applications to streamline their customer service operations, focusing on analyzing customer interaction and feedback.

Why Consider EmizenTech For Your LLM-Powered Application Development?

Large language models have become pivotal in transforming how businesses operate, enabling advanced capabilities such as natural language understanding, automated content generation, and enhanced customer interactions.

Their ability to process and analyze vast amounts of data makes them invaluable for improving efficiency and gaining competitive advantages across various industries.

Developing LLM-powered applications is essential for organizations aiming to stay ahead in the digital landscape. These applications can streamline operations, enhance user experiences, and provide actionable insights.

As a leading AI development company, EmizenTech specializes in LLM-powered application development, delivering tailored solutions that meet business needs. With expertise in leveraging LLM models like OpenAI’s GPT, we ensure smart, scalable, and efficient applications that drive user engagement and streamline workflows.

However, successfully implementing LLMs requires specialized expertise, tailored solutions and a deep understanding of the technology to maximize their potential while managing costs effectively.

We at EmizenTech are committed to helping businesses unlock the full potential of LLMs. Our team of experienced developers and AI specialists collaborates closely with clients to design and implement customized LLM-powered applications that meet their business needs.

We focus on model selection, fine-tuning, and deploying cost-effective solutions on leading cloud platforms. Choosing EmizenTech for LLM-powered application development will not only drive growth but also provide sustainable value in the long run.

Conclusion

As LLM-powered applications continue to shape various industries, effective cost management strategies become essential for long-term success.

With these ten effective cost-reduction strategies, businesses can leverage LLM-powered applications and harness their full potential while keeping expenses in check.

Choosing the right set of models or utilizing a cost-effective cloud platform will significantly reduce operational costs.

Remember that cost management is a continuous process that requires proper analysis and adaptation. By strategically managing costs associated with LLM-powered applications, businesses can not only enhance their operational efficiency but also drive innovation and growth in this competitive landscape.

Organizations that are conscious of cost management practices will ultimately be positioned for sustained success in the future.

Frequently Asked Questions

What are the primary costs associated with LLM-powered applications?

The primary costs of LLM-powered applications include cloud computing for training and inference, API usage fees, storage for datasets, and development expenses for integration and fine-tuning to meet specific business needs.

How can small businesses benefit from LLM cost-reduction strategies?

Small businesses can save time and labor through automation, enhance customer engagement, gain valuable insights efficiently, and achieve scalability as they grow.

What tools can help monitor the cost efficiency of LLM applications?

Tools like AWS Cost Explorer, Azure Cost Management, New Relic, Datadog, and budgeting software such as QuickBooks can help monitor cost efficiency.

Is it better to build or buy an LLM-powered solution?

The decision depends on budget, time to market, customization needs, and available technical expertise. Buying is often faster and more cost-effective, while building allows for tailored solutions.

How can EmizenTech help optimize LLM-powered applications for cost reduction?

EmizenTech offers custom development, seamless integration services, performance optimization, and effective cost management strategies to help reduce ongoing expenses.